Interest in AI has grown sharply in recent years, largely because of ChatGPT. Enthusiasts across many industries are now discussing AI and how it applies to their fields. But as more people share their understanding of AI, articles full of AI terms and acronyms have multiplied, and beginners find it hard to see how the various technologies and concepts relate to one another.

Terms like LLM, Generative AI, neural networks, and GANs are frequently used by AI practitioners. For someone new to AI, it can be overwhelming to work out how these terms and technologies fit together, so it is important to clarify where specific technologies, such as ChatGPT, sit within the broader scope of AI.

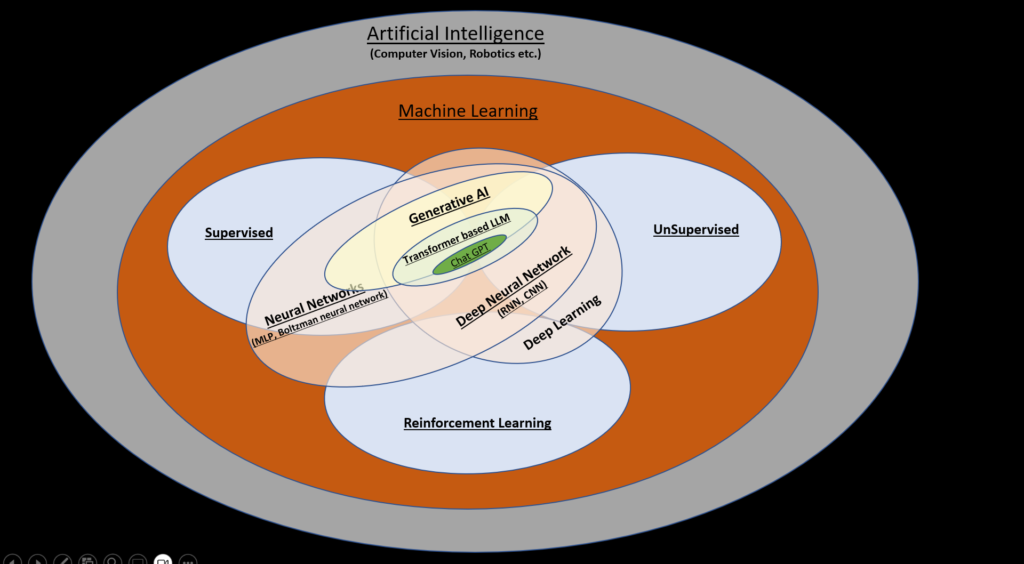

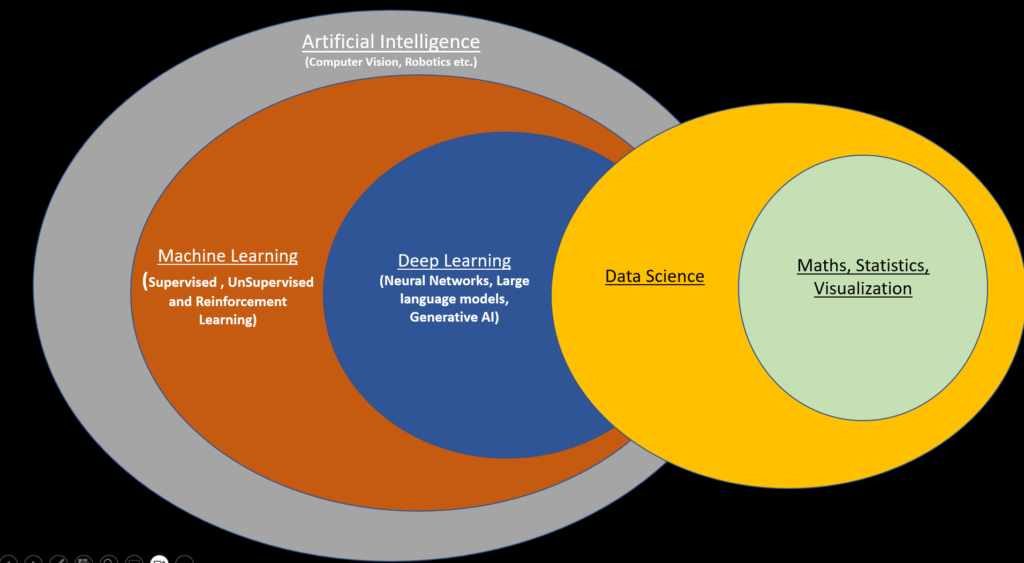

My goal is to explain these terms and the relationships between different AI technologies using a simple diagram and plain language, catering to beginners in the field of AI. The following diagram illustrates the various technologies within the field of AI and their interrelationships.

Artificial Intelligence

Artificial Intelligence (AI) refers to the ability of machines to perform tasks that typically require human intelligence, such as understanding natural language, recognizing images, and making decisions. It is a broad field that includes many different techniques and applications, including machine learning, natural language processing, robotics, and computer vision.

Many modern AI systems are designed to learn from data and improve their performance over time, which makes them more effective and efficient at solving complex problems.

The basis of Artificial Intelligence is data and the insights gained from it. Data can be structured (well-defined tables) or unstructured (articles, journals, free text, etc.). So it is important to talk about Data Science and its relationship to Artificial Intelligence.

Data science is the study of large amounts of data. It extracts meaningful insights from raw, structured, and unstructured data using the scientific method, a range of technologies, and algorithms.

It is a multidisciplinary field that uses tools and techniques to manipulate data so that you can find something new and meaningful.

Machine Learning – a subset of AI

Machine Learning is a subset of AI that focuses on building systems that can learn from data without being explicitly programmed. Instead, the system is trained on a large dataset and learns from the patterns it recognizes.
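To make "learning from data" concrete, here is a minimal sketch in plain Python. Nobody writes the rule `score = 2 * hours + 50` into the program; a least-squares fit recovers it from examples. The data, the variable names, and the `fit_line` helper are all made up for illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: hours studied -> exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

# "Training": the pattern is learned from the examples.
slope, intercept = fit_line(hours, scores)

# "Prediction": apply the learned pattern to an input the program has not seen.
prediction = slope * 6 + intercept
print(slope, intercept, prediction)  # 2.0 50.0 62.0
```

Real machine learning systems use far more data and far more flexible models, but the workflow is the same: fit on examples, then predict on new inputs.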

Machine Learning can be divided into three categories:

- Supervised learning: Algorithms in supervised learning learn to make predictions based on labeled data. (Labeled data means raw data such as images, text files, or videos, paired with one or more meaningful and informative labels that provide context, so that a machine learning algorithm can learn from it.)

- Unsupervised learning: Algorithms in unsupervised learning learn from unlabeled data to identify patterns or groupings.

- Reinforcement learning: Algorithms in reinforcement learning learn to make decisions based on rewards and punishments.
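The three categories above can be sketched with toy examples in plain Python. This is only an illustration under simplifying assumptions: the fruit weights, the numbers to cluster, the reward values, and every function name are invented, and each technique shown (nearest neighbour, 2-means clustering, an epsilon-greedy bandit) is just one simple representative of its category.

```python
import random

# Supervised: learn from labeled examples (1-nearest-neighbour).
# Each example pairs a feature (weight in grams) with a label.
labeled = [(110, "apple"), (120, "apple"), (150, "orange"), (160, "orange")]

def predict(weight):
    """Return the label of the closest labeled example."""
    nearest = min(labeled, key=lambda pair: abs(pair[0] - weight))
    return nearest[1]

# Unsupervised: find groupings in unlabeled data (2-means clustering).
def two_means(values, steps=10):
    """Split 1-D values into two groups and return the two group centres."""
    low, high = min(values), max(values)  # initial guesses for the centres
    for _ in range(steps):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return low, high

# Reinforcement: learn from rewards and punishments (epsilon-greedy bandit).
def learn_best_action(rewards, steps=500, epsilon=0.1, seed=0):
    """Try actions, track the average reward of each, return the best one."""
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)
    counts = [0] * len(rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(rewards))  # explore a random action
        else:
            action = max(range(len(rewards)), key=estimates.__getitem__)  # exploit
        reward = rewards[action]  # feedback from the (toy) environment
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return max(range(len(rewards)), key=estimates.__getitem__)

print(predict(112), predict(158))          # apple orange
print(two_means([1, 2, 3, 10, 11, 12]))    # (2.0, 11.0)
print(learn_best_action([-1.0, 1.0, 0.0])) # 1
```

Note what each function receives: `predict` relies on labels, `two_means` gets only raw numbers, and `learn_best_action` gets no examples at all, only a reward (positive) or punishment (negative) after each action it tries.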

Deep Learning – a subset of Machine Learning

Deep learning is a subset of Machine Learning that teaches computers to process data in a way that is inspired by the human brain. Deep learning models can recognize complex patterns in pictures, text, sounds, and other data to produce accurate insights and predictions. You can use deep learning methods to automate tasks that typically require human intelligence, such as describing images or transcribing a sound file into text.

Deep Learning focuses on building artificial neural networks that can learn from data. Neural networks are designed to mimic the structure of the human brain.

Neural networks

A neural network is a software solution that leverages machine learning (ML) algorithms to ‘mimic’ the operations of a human brain. Neural networks are also known as artificial neural networks (ANNs).

Artificial neural networks are inspired by the biological neurons in the human body, which activate under certain conditions and trigger a corresponding action by the body.
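A single artificial neuron can be sketched in a few lines of Python. It multiplies each input by a weight, adds a bias, and passes the total through an activation function that decides how strongly the neuron "fires". The sigmoid activation and the example numbers are assumptions chosen for illustration; other activation functions are common in practice.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed into the range 0..1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # sigmoid activation

# The same inputs produce a stronger activation when the weights are larger.
weak = neuron([1.0, 1.0], [0.5, 0.5], 0.0)
strong = neuron([1.0, 1.0], [2.0, 2.0], 0.0)
print(weak < strong)  # True
```

Training a neural network means adjusting the weights and biases of many such neurons until the outputs match the training data.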

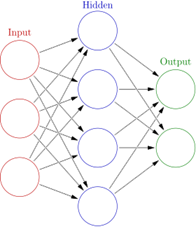

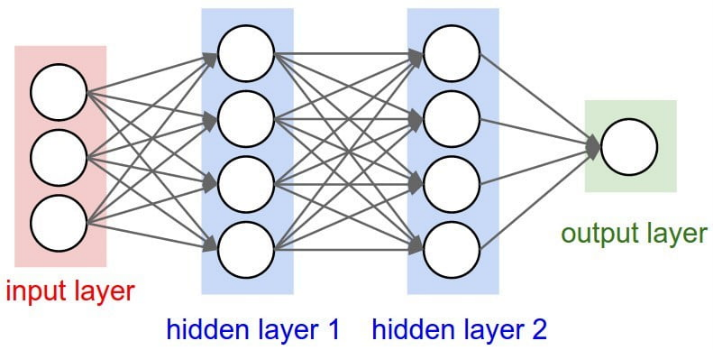

The architecture of a neural network comprises node layers that are distributed across an input layer, single or multiple hidden layers, and an output layer.

Input Layer:

As the name suggests, it accepts inputs in several different formats provided by the programmer.

Hidden Layer:

The hidden layer sits between the input and output layers. It performs the calculations that find hidden features and patterns in the input.

Output Layer:

The input goes through a series of transformations in the hidden layer, and the final result is delivered through this layer.

A neural network with only one hidden layer is often called a shallow neural network.

Deep neural networks have multiple hidden layers. ‘Deep’ refers to the model being multiple layers deep.
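The layer structure described above can be sketched as a "forward pass" in plain Python: the input values flow through each layer in turn, and the last layer's result is the output. The weights below are arbitrary numbers picked for illustration (a trained network would have learned them), and the function names are hypothetical.

```python
import math

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """One layer: each row of `weights` holds the input weights of one neuron."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    """Pass the inputs through every (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# One hidden layer (3 neurons) followed by an output layer (1 neuron).
one_hidden = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]

# Two hidden layers (3 and 2 neurons): the same idea, just deeper.
two_hidden = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.3, 0.3, -0.6], [0.9, -0.1, 0.2]], [0.0, 0.0]),
    ([[0.6, -0.4]], [0.1]),
]

print(forward([1.0, 2.0], one_hidden))
print(forward([1.0, 2.0], two_hidden))
```

Making a network deeper only means adding more entries to the list of layers; the `forward` function does not change.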

Note: In AI, a model is a set of mathematical equations and algorithms that a computer uses to analyze data and make decisions.

Neural networks can be classified by their structure, data flow, the neurons used and their density, the number of layers, and so on. Some common types are:

- Convolutional Neural Networks (CNN)

- Recurrent Neural Networks (RNN)

- Feedforward Neural Networks (FNN)

- Generative Adversarial Networks (GAN)

- Long Short-Term Memory Networks (LSTM)

- Multi-Layer Perceptrons (MLP)

- Radial Basis Function Networks (RBFN)

I will not cover the details of each here, because each of these terms deserves a separate post of its own and this article is meant to stay concise for beginners.

Generative AI – a subset of Deep Learning

Generative AI is a subset of deep learning that focuses on building systems that can generate new data, such as images, videos, and audio.

Generative AI is a set of algorithms capable of generating seemingly new, realistic content (such as text, images, or audio) based on patterns learned from training data. The most powerful generative AI algorithms are built on top of foundation models, which are trained on a vast quantity of unlabeled data in a self-supervised way to identify underlying patterns that are useful for a wide range of tasks.

Generative AI has many applications, such as creating realistic images, generating text, and even creating new music.

At present, the two most widely used kinds of generative AI model are:

Generative Adversarial Networks (GANs) are a powerful class of neural networks used for unsupervised learning that can create visual and multimedia artifacts from both image and text input. A GAN is made up of two competing neural networks: a generator that produces candidate data, and a discriminator that tries to tell the generated data apart from real data. The competition pushes the generator to capture and reproduce the variations within the data.

Transformer-based models include technologies such as Generative Pre-trained Transformer (GPT) language models, which are trained on text gathered from the Internet and can create textual content ranging from website articles to press releases to whitepapers. A transformer model is a neural network that learns context, and thus the meaning of a sentence, by tracking relationships in sequential data. Transformers are in many cases replacing convolutional and recurrent neural networks (CNNs and RNNs).
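The "two competing networks" idea behind GANs can be sketched with a deliberately tiny example in plain Python. Everything here is an assumption made for illustration: the "real" data are numbers drawn around 4.0, the generator is a single line (`a * noise + b`), the discriminator is a single neuron, and the names and learning rate are invented. Real GANs use deep networks and a deep learning framework, and their training is much harder to stabilize.

```python
import math
import random

def sigmoid(u):
    """Logistic function, written to avoid overflow for large |u|."""
    if u >= 0:
        return 1 / (1 + math.exp(-u))
    e = math.exp(u)
    return e / (1 + e)

def train_toy_gan(steps=300, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # one sample of "real" data
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b         # the generator's attempt at a sample

        # Discriminator step: push D(real) towards 1 and D(fake) towards 0
        # by gradient descent on -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * (-(1 - d_real) * real + d_fake * fake)
        c -= lr * (-(1 - d_real) + d_fake)

        # Generator step: change a and b so the discriminator rates the fake
        # sample as more "real" (gradient descent on -log D(fake)).
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1 - d_fake) * w
        a -= lr * grad_fake * noise
        b -= lr * grad_fake
    return (a, b), (w, c)

generator, discriminator = train_toy_gan()
print("generator parameters:", generator)
print("discriminator parameters:", discriminator)
```

The point of the sketch is the alternation: each training step first improves the discriminator at spotting fakes, then improves the generator at fooling it.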

Large Language Model (LLM)

Large Language Models (LLMs) are foundation models: neural networks that use deep learning to process and understand natural language. These models are trained on massive amounts of text data to learn patterns and entity relationships in the language. LLMs can perform many types of language tasks, such as translating languages, analyzing sentiment, and holding chatbot conversations. They can understand complex textual data, identify entities and the relationships between them, and generate new text that is coherent and grammatically accurate.

Training LLMs requires massive, expensive server farms that act as supercomputers.

GPT-3 (Generative Pre-trained Transformer 3) is a family of deep learning language models built on the transformer neural network architecture by OpenAI, a San Francisco-based artificial intelligence research laboratory. It is an autoregressive model (it predicts the next piece of text from the text so far) that is pre-trained on a large corpus of text to generate high-quality natural language text. The 3 means that this is the third generation of GPT models. GPT-3 is designed to be flexible and can be fine-tuned for a variety of language tasks, such as language translation, summarization, and question answering.
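A minimal sketch of what "autoregressive" and "pre-trained on text" mean, in plain Python. This toy model only counts which word tends to follow which (a bigram model), whereas GPT models use a large transformer network, so treat it as an analogy for the generation loop rather than a description of how GPT-3 works internally. The corpus and function names are made up.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """'Pre-training': count, for every word, which words follow it."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def generate(model, start, max_words=5):
    """Autoregressive generation: each new word is predicted from the text
    produced so far (here, just the last word) and then appended to it."""
    output = [start]
    while len(output) < max_words and output[-1] in model:
        next_word = model[output[-1]].most_common(1)[0][0]
        output.append(next_word)
    return " ".join(output)

corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigram(corpus)
print(generate(model, "the"))     # the cat sat on the
print(generate(model, "mat", 4))  # mat and the cat
```

An LLM follows the same loop of predicting, appending, and repeating, but it looks at the whole preceding text rather than only the last word.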

As mentioned above, a transformer is a type of neural network trained to analyze the context of input data and weigh the significance of each part of the data accordingly. Since this type of model learns context, it is commonly used in natural language processing (NLP) to generate text similar to human writing.
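The "weighing the significance of each part" step is called attention. Below is a minimal sketch of scaled dot-product attention for a single query in plain Python. The tiny two-number vectors are invented for illustration; in a real transformer the queries, keys, and values are learned, much larger, and processed in parallel for every position in the text.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # How relevant is each part of the input (each key) to the query?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the values, giving more weight to the more relevant parts.
    size = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(size)]
    return weights, output

keys = [[1.0, 0.0], [0.0, 1.0]]      # two parts of the input
values = [[10.0, 0.0], [0.0, 10.0]]  # the information each part carries
weights, output = attention([2.0, 0.0], keys, values)
print(weights)  # the first part gets the larger weight
print(output)
```

Because the query points in the same direction as the first key, the first part of the input receives most of the weight and dominates the output.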

Some of the other popular LLMs you might hear about are:

- BERT by Google

- GPT-4 by OpenAI

- LaMDA by Google

ChatGPT

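ChatGPT is a chatbot from OpenAI that enables users to “converse” with it in a way that’s meant to mimic natural conversation. It is built on OpenAI's GPT large language models, so it sits within the LLM, generative AI, and deep learning categories described above. As a user, you can ask questions or make requests in the form of prompts, and ChatGPT will respond.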

Prompt Engineering

Prompt engineering is the process of refining the input given to generative AI services such as ChatGPT so that they produce the best possible responses (text or images). A prompt engineer crafts the question or command that will guide the AI to deliver the most accurate and useful answer.
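One simple way to picture this is a small helper that assembles a prompt from separate pieces. The sketch below is plain Python string handling and does not call any AI service; the `build_prompt` function, its parameters, and the section labels are hypothetical choices for illustration, not a standard format.

```python
def build_prompt(task, context="", output_format="", examples=()):
    """Assemble a prompt from a task plus optional context, examples and format."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    for question, answer in examples:
        parts.append(f"Example question: {question}\nExample answer: {answer}")
    parts.append(f"Task: {task}")
    if output_format:
        parts.append(f"Answer format: {output_format}")
    return "\n\n".join(parts)

# A vague prompt leaves the AI to guess the audience, depth and format.
vague = build_prompt("Tell me about neural networks.")

# A refined prompt states who the answer is for and what shape it should take.
specific = build_prompt(
    task="Explain what a hidden layer does in a neural network.",
    context="The reader is new to AI and knows basic school maths.",
    output_format="Three short bullet points, no jargon.",
)
print(specific)
```

The resulting text is what you would paste or send to a chatbot such as ChatGPT; prompt engineering is largely the practice of iterating on these pieces until the responses are reliably useful.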